Updating the lane-keeping AI to a lane-changing AI proved to be largely a lesson in keeping things as simple as possible.

With the original lane-keeping AI, the model was able to learn to remain in its current lane and to avoid touching the pink road divider.

It learnt this so effectively that the model would complete tens of thousands of training steps without ever receiving the crash penalty, because the car simply never crashed.

However, when I updated the same environment so that the car could receive an input telling it to change lanes, the model was unable to learn this behaviour. Whether I trained a new model from scratch or taught it step by step via curriculum learning, it simply could not learn to change lanes.

After a great deal of thought, I eventually realised the likely cause, and with it a solution. As is often the case when training a model with reinforcement learning, the data was too complex for the model to understand.

(I prefer to think of data in terms of complexity and simplicity, rather than in terms of noise and cleanness.)

For lane-changing, the model was expected to keep track of the lane it was currently driving in, the other lane, and the road divider. In machine learning, each additional feature the model has to track multiplies the number of possible scenarios it must be trained for, so the complexity grows exponentially with the number of features. In this case, the complexity was great enough that the model could not interpret the data correctly, even with PPO (Proximal Policy Optimization), an exceptionally capable algorithm.
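To make that growth concrete, here is a minimal sketch that counts distinct situations, assuming each tracked feature is discretised into a fixed number of values and that the features vary independently. The feature names and the figure of 10 values per feature are hypothetical, chosen purely for illustration; they are not taken from the actual environment.

```python
import math
from typing import Dict


def scenario_count(feature_bins: Dict[str, int]) -> int:
    """Number of distinct discretised situations when each tracked feature
    can independently take `feature_bins[name]` values."""
    return math.prod(feature_bins.values())


# Hypothetical discretisation: 10 possible values per tracked feature.
full = {"current_lane": 10, "other_lane": 10, "road_divider": 10}
simplified = {"target_lane": 10}

print(scenario_count(full))        # 1000
print(scenario_count(simplified))  # 10
```

Each extra feature multiplies the count rather than adding to it, which is why dropping two of the three features shrinks the space so dramatically.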

The solution was to delete the road divider and the other lane. Following the Tesla approach, as I had with the original lane-keeping AI, I realised that the AI does not need an awareness of everything in the surrounding world.

Applied to the lane-changing AI, this meant that the model did not need an awareness of the other lane, or even of the road divider. It simply needed to know which lane it should currently be driving in.

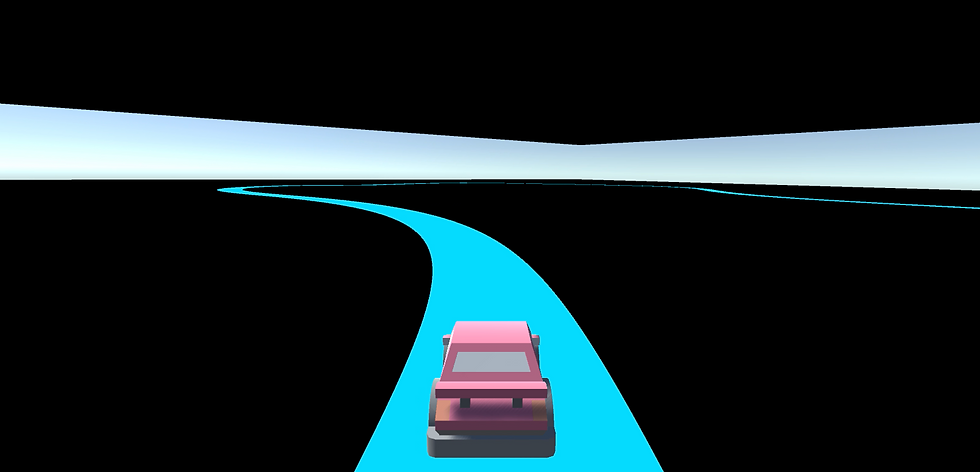

Hence, I updated the environment to highlight only the current target lane, in blue, and to leave everything else black. The road divider was removed entirely.
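A minimal sketch of this kind of observation, assuming a straight road viewed top-down, with lanes given as hypothetical pixel-column ranges (`lane_bounds`) and the image stored as nested lists of RGB tuples. The real environment's rendering code is not shown in this post, so `render_observation` and its parameters are illustrative only.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
BLUE: Pixel = (0, 0, 255)
BLACK: Pixel = (0, 0, 0)


def render_observation(width: int, height: int,
                       lane_bounds: List[Tuple[int, int]],
                       target_lane: int) -> List[List[Pixel]]:
    """Render a simplified top-down observation (hypothetical helper).

    Only the target lane is drawn, in blue. Every other pixel, including
    the other lane and where the road divider used to be, is black.
    lane_bounds[i] is the (x_start, x_end) column range of lane i,
    with x_end exclusive.
    """
    x_start, x_end = lane_bounds[target_lane]
    return [[BLUE if x_start <= x < x_end else BLACK for x in range(width)]
            for _ in range(height)]


lanes = [(0, 4), (4, 8)]  # hypothetical: two lanes, 4 px wide each
obs = render_observation(8, 2, lanes, target_lane=0)
print(["B" if p == BLUE else "." for p in obs[0]])
# ['B', 'B', 'B', 'B', '.', '.', '.', '.']
```

Under this representation a lane-change command amounts to nothing more than changing `target_lane`; the format of the observation stays exactly the same.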

Once this simpler environment was in place, something fascinating happened. To test the new model, I first disabled the lane-changing functionality and trained it purely to lane-keep. Once it had learnt this successfully in the new environment, I re-enabled lane-changing and resumed training. This time, the data was simple enough that the car immediately knew how to change lanes.

Further training improved the accuracy and efficiency of its lane changes, but the transfer from lane-keeping to lane-changing itself was instant.
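One possible reading of why the transfer was instant: because the observation encodes only the target lane, a lane-change command looks to the policy like the car suddenly being off-centre in its lane, which lane-keeping already corrects. The toy simulation below illustrates that idea under heavy assumptions: the car's state is a single lateral position, and `lane_keeping_policy` is a hand-written proportional controller standing in for the trained PPO policy, not the actual model.

```python
from typing import Callable, List


def lane_keeping_policy(offset: float, gain: float = 0.5) -> float:
    """Hand-written stand-in for a trained lane-keeping policy (hypothetical).

    `offset` is the car's lateral position minus the centre of the
    highlighted lane; the return value is a lateral correction that
    steers back towards that centre.
    """
    return -gain * offset


def simulate(lane_centres: List[float],
             target_schedule: Callable[[int], int],
             start_x: float,
             steps: int) -> List[float]:
    """Run the same lane-keeping policy while the highlighted lane changes."""
    x = start_x
    trace = []
    for t in range(steps):
        target = target_schedule(t)        # which lane is highlighted at step t
        offset = x - lane_centres[target]  # the only thing the policy observes
        x += lane_keeping_policy(offset)
        trace.append(x)
    return trace


# Hypothetical setup: two lanes centred at x=2 and x=6; the lane-change
# command arrives at step 5, switching the highlighted lane from 0 to 1.
trace = simulate([2.0, 6.0], lambda t: 0 if t < 5 else 1, start_x=2.0, steps=30)
print(round(trace[4], 3), round(trace[-1], 3))  # prints: 2.0 6.0
```

Nothing in the policy changes between the two phases; only the highlighted lane does, and the car settles into the new lane using the same behaviour it used to stay in the old one.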

This was an immensely valuable lesson, not only in the benefits of simple data for training a model, but also in how simplified data, used efficiently, allows a model to be retrained for other functions.
